When it comes to optimizing email campaign results, A/B testing systematically comes up. Almost every tool has an A/B testing feature, so why deprive yourself? What's more, once you understand how to use it, it's almost addictive (and fun too). There is, however, one question that always comes up: "Marion, for a subject line test, we look at the open rate, don't we?" Since it's a recurring question from our customers, it deserved a proper article!

A quick look at what A/B testing is

Definition: A/B testing lets you test several variants of an email (typically 2), each sent to a randomly selected portion of the target. Then, the best-performing version is sent to the rest of the target.

First, let's unpack the terms of these two sentences and add our recommendations...

- "several variants": In fact, you can test EVERYTHING (and you MUST test everything): subject line, preheader, sender, button position, call-to-action wording, button color, image, design, features or benefits to highlight, how the price is announced, placement of elements, send time... Be careful, however, to test only ONE variable at a time. If you are testing a subject line, change ONLY the subject line, not the preheader or the creative. Otherwise you won't know which change really made the difference!

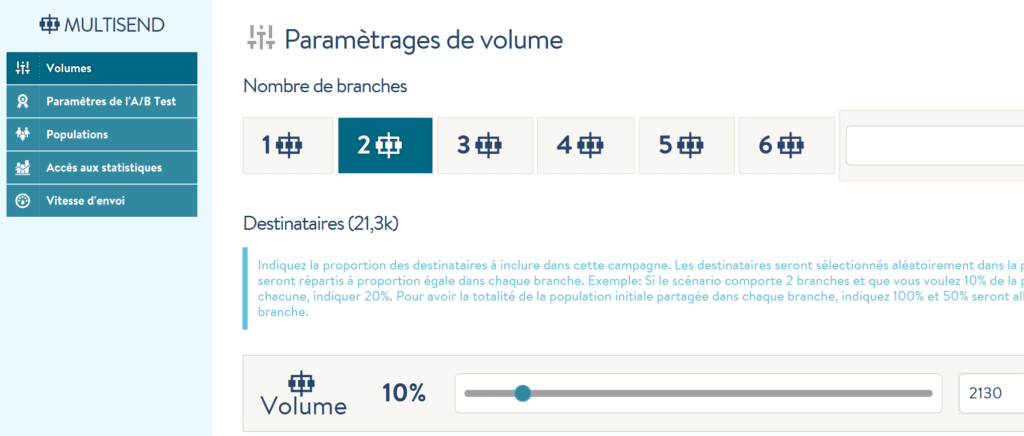

- "on a portion of the target": We usually take 10% of the contact base. In any case, we make sure that we have at least 1,000 contacts in each segment, 1,000 being a fairly representative number.

- "Then": We like to leave 24 hours, or at least 12 working hours (in other words: if I send an A/B test at 8am, the winning version will ideally go out the next day at 8am or, if we are in a hurry, that same evening at 8pm).

- "the best performing": This is what often leads to a little debate!
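The split and timing recommendations above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function and parameter names (`split_for_ab_test`, `fraction`, `min_per_segment`, `winner_send_time`) are hypothetical, the 10% is applied per variant, and the "12 working hours" are treated as a plain 12-hour delay. Your campaign tool normally does this for you.

```python
import random
from datetime import datetime, timedelta


def split_for_ab_test(contacts, n_variants=2, fraction=0.10,
                      min_per_segment=1000, seed=None):
    """Randomly split contacts into n_variants test segments plus the rest.

    Assumption: `fraction` applies to each variant, and a segment is raised
    to `min_per_segment` when the fraction alone would give fewer contacts.
    """
    segment_size = max(int(len(contacts) * fraction), min_per_segment)
    if segment_size * n_variants > len(contacts):
        raise ValueError("contact base too small for these test segments")

    shuffled = list(contacts)              # copy: the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # random order, not a default sort

    segments = [shuffled[i * segment_size:(i + 1) * segment_size]
                for i in range(n_variants)]
    remainder = shuffled[n_variants * segment_size:]
    return segments, remainder


def winner_send_time(test_sent_at, in_a_hurry=False):
    """Return when the winner goes out: 24 hours later, or 12 if in a hurry."""
    return test_sent_at + timedelta(hours=12 if in_a_hurry else 24)
```

With a base of 20,000 contacts this would give two test segments of 2,000 contacts each and 16,000 contacts left for the winning version; with 5,000 contacts, the 1,000-contact minimum takes over.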

Which performance indicator: open or click?

In the case of a subject line test, many people tend to use the open rate as the performance indicator for an A/B test campaign. The thinking goes: if it gets opened more, it gets clicked more, so I'll get more conversions. That sounds logical at first glance. But when you look closely at the results of test campaigns, you often see that version A gets more clicks than version B even though it gets fewer opens.

The objective of an email campaign is to get as many clicks as possible (excluding clicks on unsubscribe links, of course!). Following this logic, we prefer to use the number of clicks as the indicator for selecting the winning version!

Better yet, if your email campaign management tool reports the number of conversions, you can use that as the selection indicator.
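To make the selection rule concrete, here is a minimal sketch in Python. The names (`VariantResult`, `pick_winner`) and the figures are hypothetical and chosen only for illustration. The sketch compares rates (metric divided by emails sent) rather than raw counts, which is an assumption that gives the same result as raw counts when the test segments are the same size.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VariantResult:
    name: str
    sent: int
    opens: int
    clicks: int                        # unsubscribe-link clicks excluded
    conversions: Optional[int] = None  # None when the tool does not report them


def pick_winner(results):
    """Pick the winner on conversions if every version has them, else on clicks."""
    if all(r.conversions is not None for r in results):
        metric = "conversions"
    else:
        metric = "clicks"
    winner = max(results, key=lambda r: getattr(r, metric) / r.sent)
    return winner, metric


# Made-up figures: B is opened more, but A is clicked more.
version_a = VariantResult("A", sent=1000, opens=220, clicks=48)
version_b = VariantResult("B", sent=1000, opens=260, clicks=35)
winner, metric = pick_winner([version_a, version_b])
print(winner.name, metric)
```

In this made-up case an open-rate rule would have sent version B to the rest of the target, while the click rule picks version A.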

That said, we agree that your newsletter sender name must be as stable and permanent as possible, so it's not a creative element. Whatever happens, the subject line is the lever you'll have to work on to improve your open rate.

Is A/B testing easy to set up?

YES, because you can find an A/B test module in almost all marketing campaign management tools.

That said, here's a short list of things that can set one tool apart from another (so be sure to check these points during demos when you run a tender to select a new eCRM campaign management tool. Oh yes, we can help you with that ;-))

- Manual or automated: Some tools (though very few) offer an A/B test module that is manual only, meaning you have to look at the performance of your 2 test campaigns yourself 24 hours later and pick the one you think performed better. In short, the risk of forgetting or making a mistake is very high!

- Selection indicators: The open rate and the click rate are very often available as indicators; the conversion rate, the unsubscribe rate, the number of survey respondents... are less common, yet they can be very useful for campaigns with specific objectives.

- Number of testable versions: Some tools limit the number of versions you can test, others have no limit.

- A/B testing in a scenario: Unfortunately, very few tools let you run A/B tests inside scenarios... Yet this would be a real competitive advantage.

- Design/ergonomics: As with everything else, some tools are more user-friendly than others.

Integrate A/B testing into your mailing schedule

As we said above, we MUST test ALL the elements of an email. And there are many of them. The best and most structured way is to include these tests in your sending schedule.

This way, you'll keep track of what you've tested and what worked. You'll be able to determine which content and visual layouts best suit your targets. This will make your decisions easier and let you create increasingly effective email campaigns. And here too, we can help: we love coaching!
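As a rough illustration of what keeping track can look like, here is a small Python sketch of a test log. Everything in it is hypothetical (the `ABTestRecord` fields, the `winning_choices` helper); a spreadsheet column per field would do the same job.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class ABTestRecord:
    send_date: date
    campaign: str
    variable: str   # the ONE element that differed between versions
    variants: dict  # version label -> what that version used
    winner: str     # label of the winning version
    metric: str     # indicator used to pick the winner


def winning_choices(log, variable):
    """Count how often each choice won for a given tested variable."""
    return Counter(rec.variants[rec.winner]
                   for rec in log if rec.variable == variable)
```

Calling `winning_choices(log, "subject line")` on such a log would return, for each subject line style you tried, the number of tests it won, which is the kind of history that makes the next decision easier.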

2 responses

Be careful to check that the segment portion is random.

The platforms I use apply a default criterion (member seniority), and if you don't specify that you want a random sample, bias is guaranteed!

Good article Marion 🙂

A/B testing was an important part of the campaign improvement/optimization strategy.

was? yes, was...

With the advent of AI and deep learning, misusing A/B testing can look like an attempt to get past filters. Yes, that is what spammers do to counter some filters: they vary their content, which your A/B-tested campaign also does, to a lesser extent. So running A/B tests will soon look, from the machine's point of view, like the practice of an unskilled spammer.

So A/B remains a tool to be used with circumspection and ALL versions must be well thought out beforehand to generate a minimum of behavioral data.

As a result, the days of asking the machine to decide for us, to spare our brains the effort, will soon be over.